Chalkaat

Augmented reality laser-cutting

Sharma A., Madhvanath S., Billinghurst M. (published at ACM ICMI)

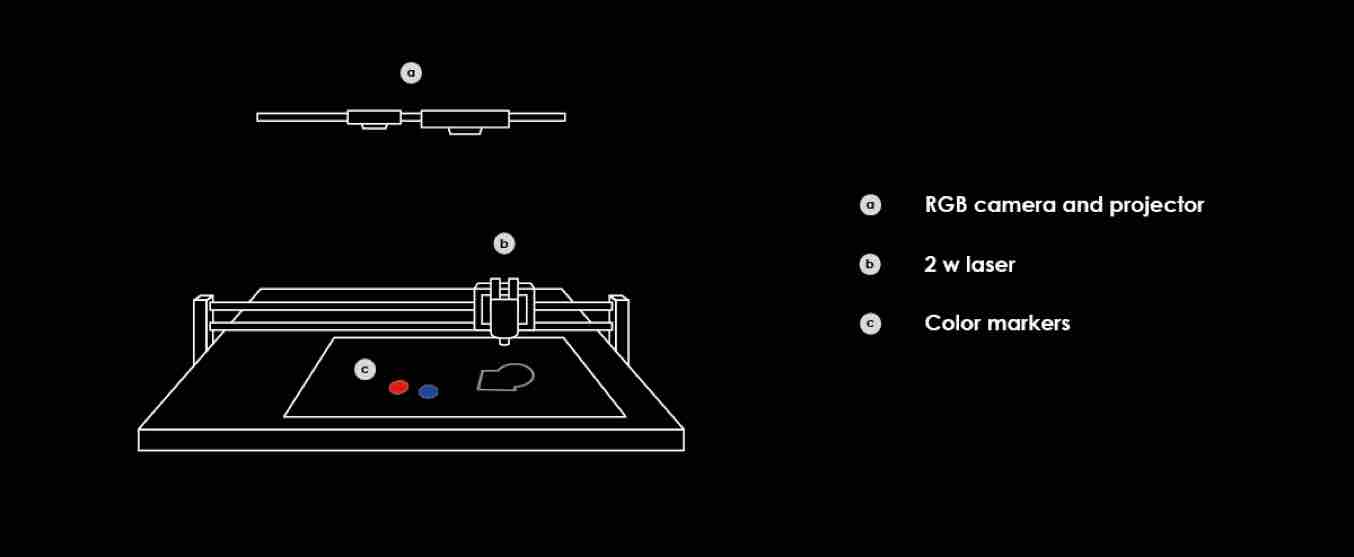

System

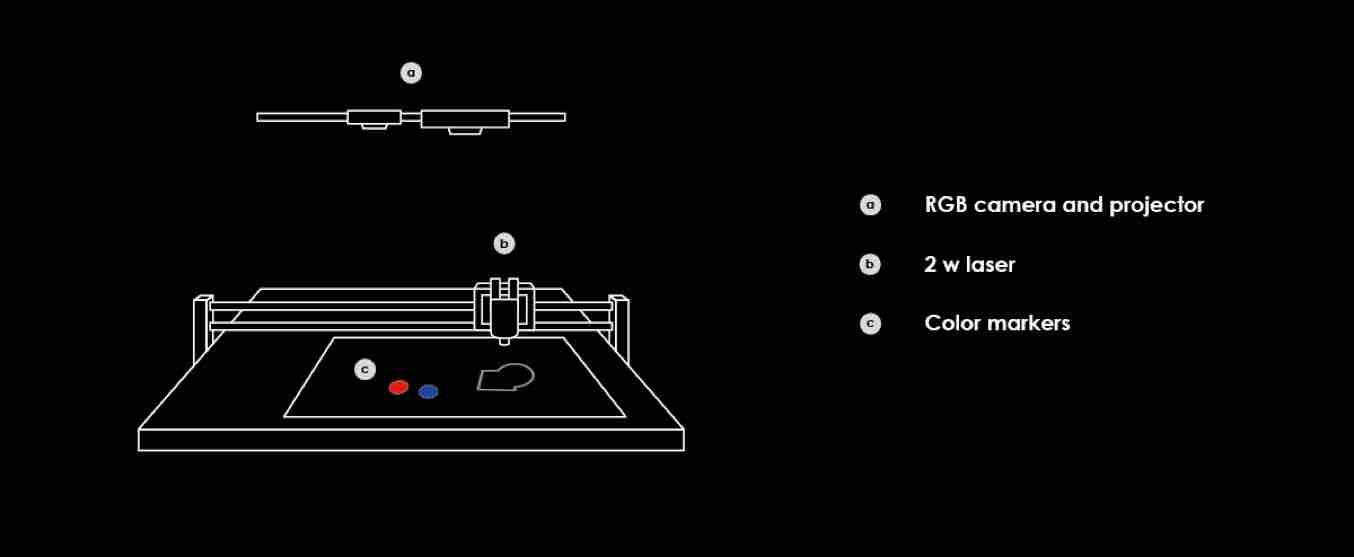

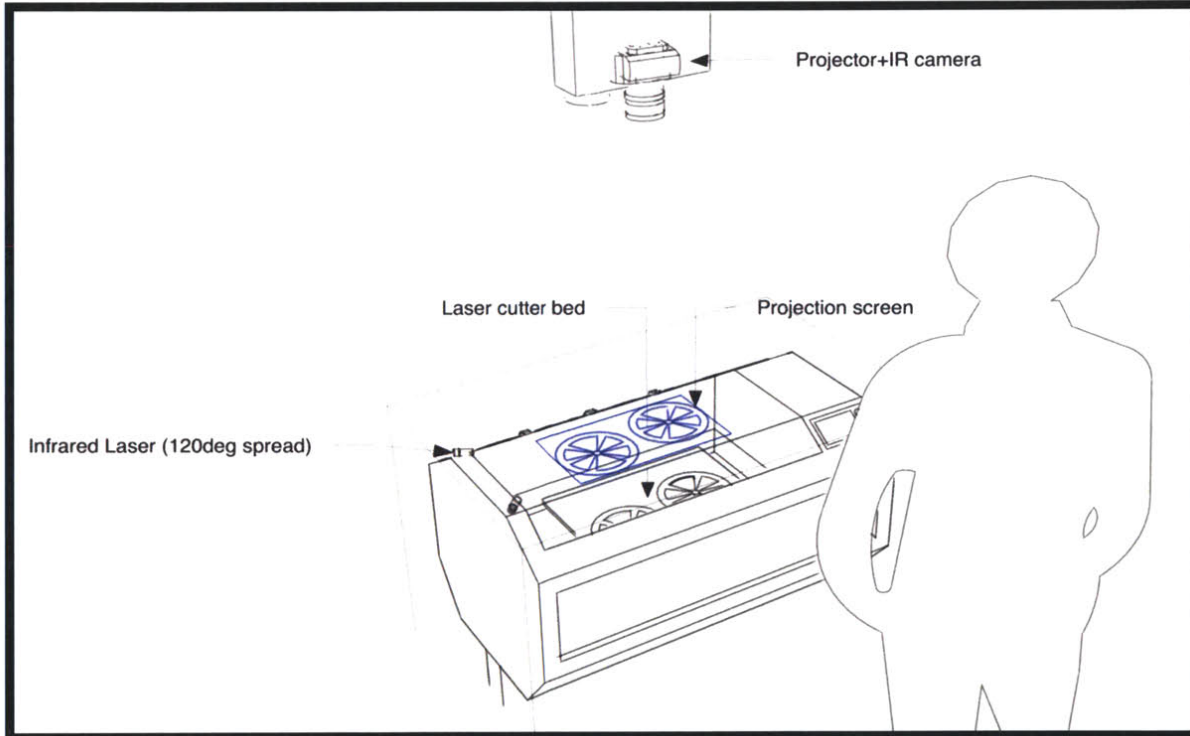

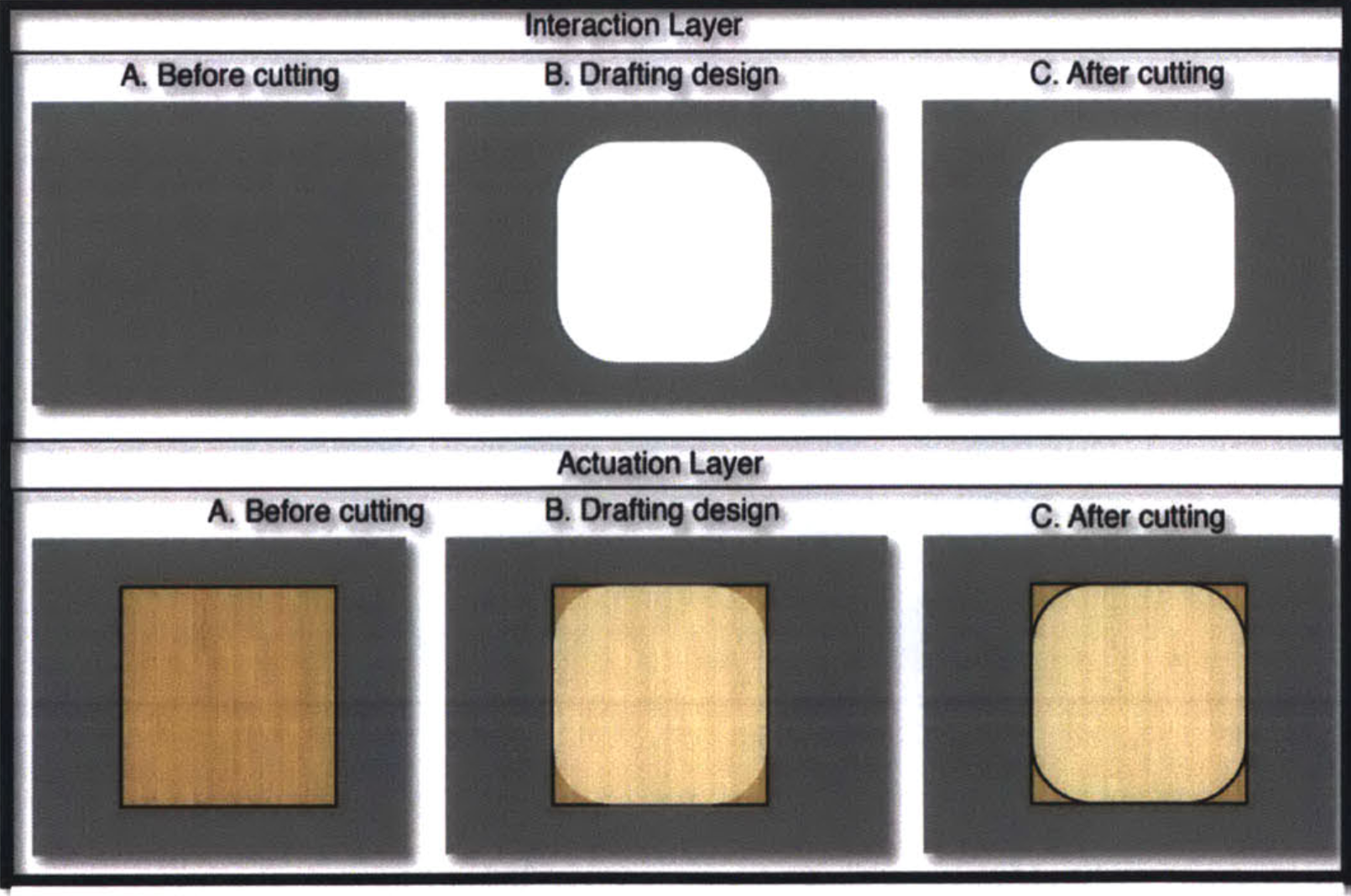

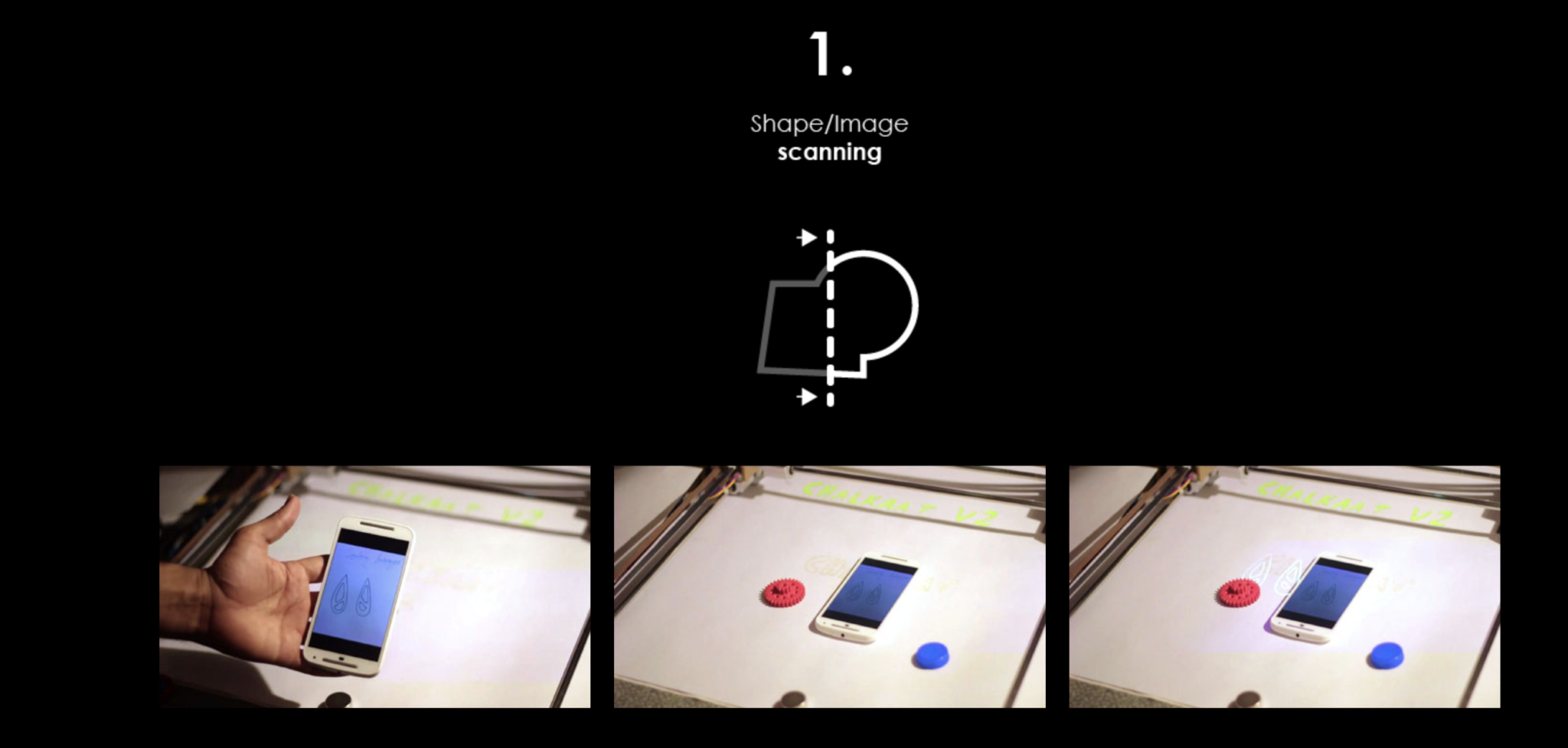

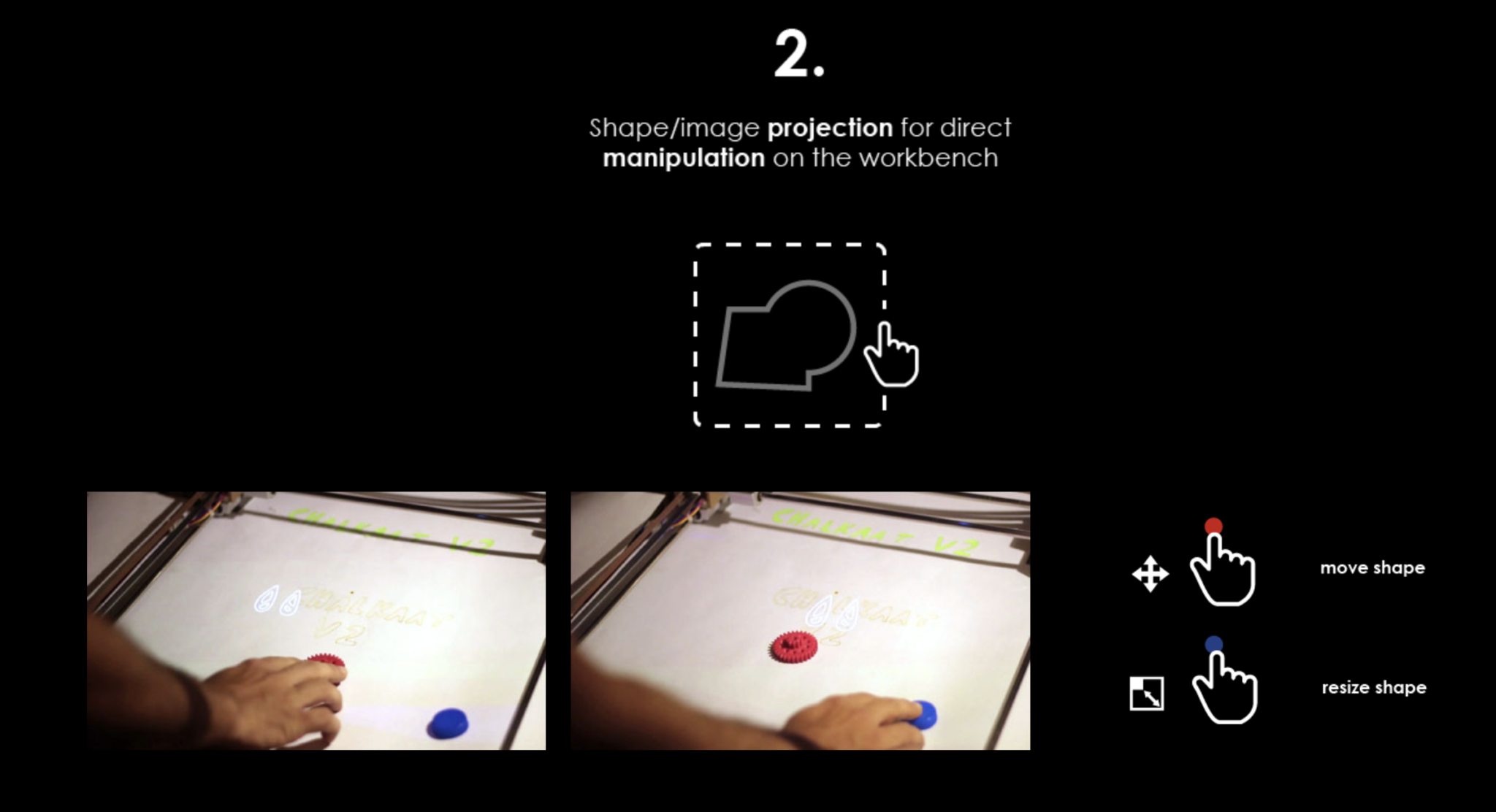

The system combines a semi-transparent display and optical tracking, both interfaced with the laser cutter, so that users can draw virtual graphics directly on top of their workpiece. It also lets users make virtual copies of physical artifacts, which can be cut later.
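For graphics drawn on the workpiece to line up with what the laser cuts, points seen by the tracking camera have to be expressed in the laser cutter's own coordinates. The source does not say how Chalkaat performs this calibration. A common approach for a flat bed is a planar homography estimated from four known point correspondences, and the minimal Python sketch below assumes that approach. The function names, the corner pixel coordinates and the 600 x 400 mm bed are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix3 = List[List[float]]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b with Gauss-Jordan elimination and partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b], copied so the caller's lists are untouched.
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate points: three of them may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_points(src: Sequence[Point], dst: Sequence[Point]) -> Matrix3:
    """Estimate the 3x3 homography mapping four src points onto four dst points.

    Fixes h33 = 1 and solves the resulting 8x8 linear system.
    """
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four correspondences are expected")
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, rearranged to be linear in h.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h: Matrix3, p: Point) -> Point:
    """Map one point through h, dividing by the projective coordinate w."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (
        (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
        (h[1][0] * x + h[1][1] * y + h[1][2]) / w,
    )


if __name__ == "__main__":
    # Hypothetical calibration: camera pixels of the bed corners -> bed millimetres.
    camera_corners = [(100, 50), (540, 60), (530, 420), (110, 410)]
    bed_corners_mm = [(0, 0), (600, 0), (600, 400), (0, 400)]
    H = homography_from_points(camera_corners, bed_corners_mm)
    # A tracked stroke (camera pixels) converted into laser-bed coordinates.
    stroke_px = [(200, 150), (260, 170), (320, 220)]
    print([apply_homography(H, p) for p in stroke_px])
```

Four correspondences are the minimum for a homography; with more (noisy) calibration points, a least-squares fit would be the more robust choice.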

Chalkaat is a ‘computer-less’ UI for laser cutters: users express their designs more directly by working ‘on the workpiece’ itself. A camera mounted above the workpiece tracks the strokes, and markers of different colors trigger different commands.
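One way the color-coded markers could be told apart is to convert a tracked stroke's average color to HSV and match its hue against fixed bands. This is a sketch under stated assumptions: the hue bands, the saturation and value thresholds, and the command names (`cut`, `engrave`, `copy`) are all hypothetical, since the source does not list which colors map to which commands.

```python
import colorsys
from typing import Optional, Tuple

# Hypothetical hue bands in degrees -> command names. The source only says that
# different colored markers trigger different commands, not which ones.
HUE_COMMANDS = [
    ((0.0, 15.0), "cut"),        # red (low end of the hue circle)
    ((345.0, 360.0), "cut"),     # red (wrap-around end)
    ((90.0, 150.0), "engrave"),  # green
    ((200.0, 260.0), "copy"),    # blue
]


def classify_marker(
    rgb: Tuple[int, int, int],
    min_saturation: float = 0.4,
    min_value: float = 0.2,
) -> Optional[str]:
    """Return the command for a stroke's mean 8-bit RGB color, or None if unrecognised."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # Washed-out or dark pixels (bare workpiece, shadows) are not marker ink.
    if s < min_saturation or v < min_value:
        return None
    hue_deg = h * 360.0
    for (lo, hi), command in HUE_COMMANDS:
        if lo <= hue_deg < hi:
            return command
    return None


if __name__ == "__main__":
    for color in [(230, 20, 30), (20, 160, 40), (30, 60, 220), (240, 240, 235)]:
        print(color, classify_marker(color))
```

Hue is used rather than raw RGB because it varies less with lighting across the bed; in practice the bands would need tuning to the actual marker inks and camera.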

Problem

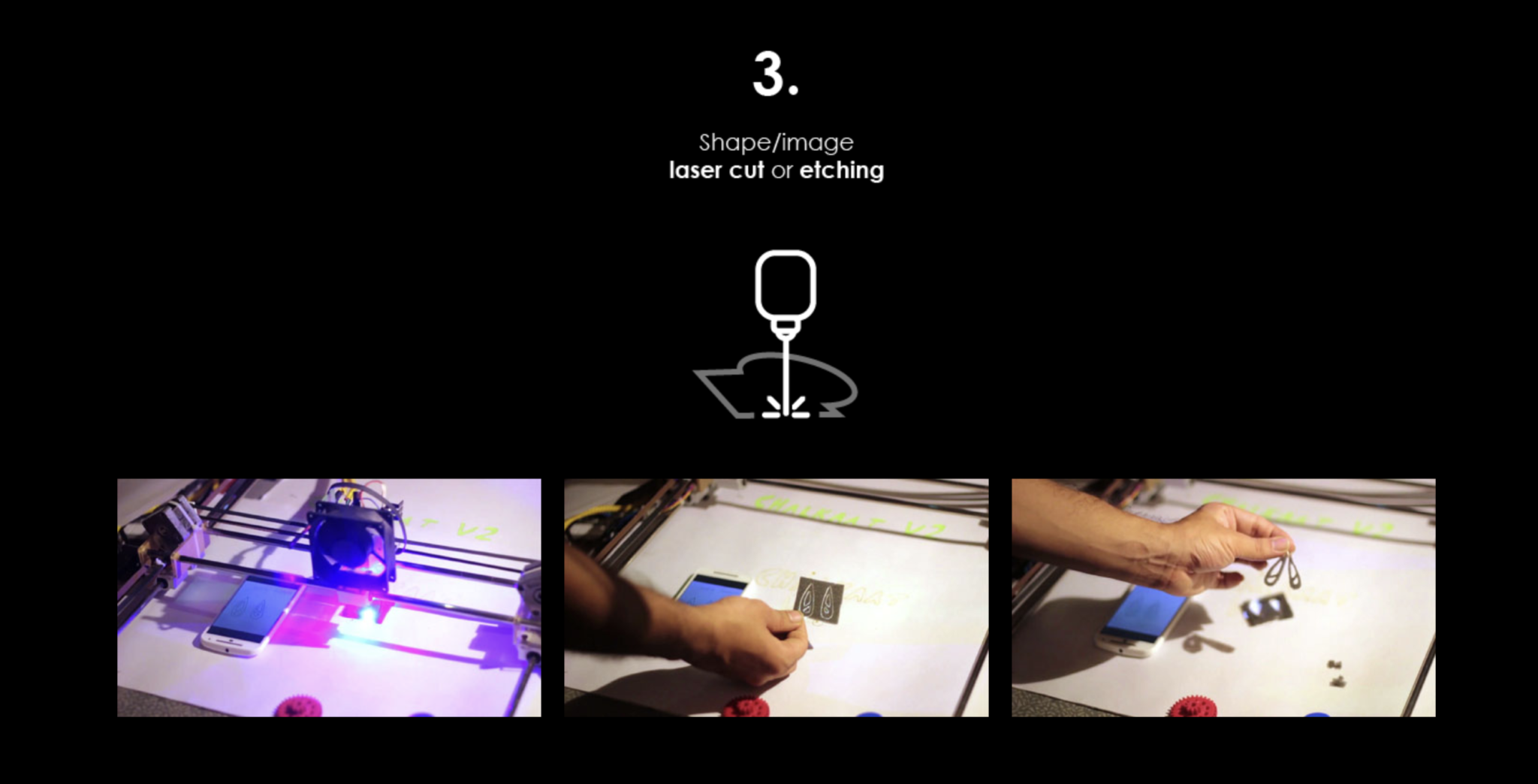

Personal fabrication is an evolving field with much potential for the coming decade, and laser cutters allow fast, high-quality cutting and engraving of a wide range of materials. Our system brings direct manipulation to laser cutting.

We prototyped the interface by building a laser cutter from the ground up, integrating DLP projection, computer vision, object recognition and stroke tracking.

In collaboration with: Nitesh Kadyan, Meghana Bhat, Fabio Besti

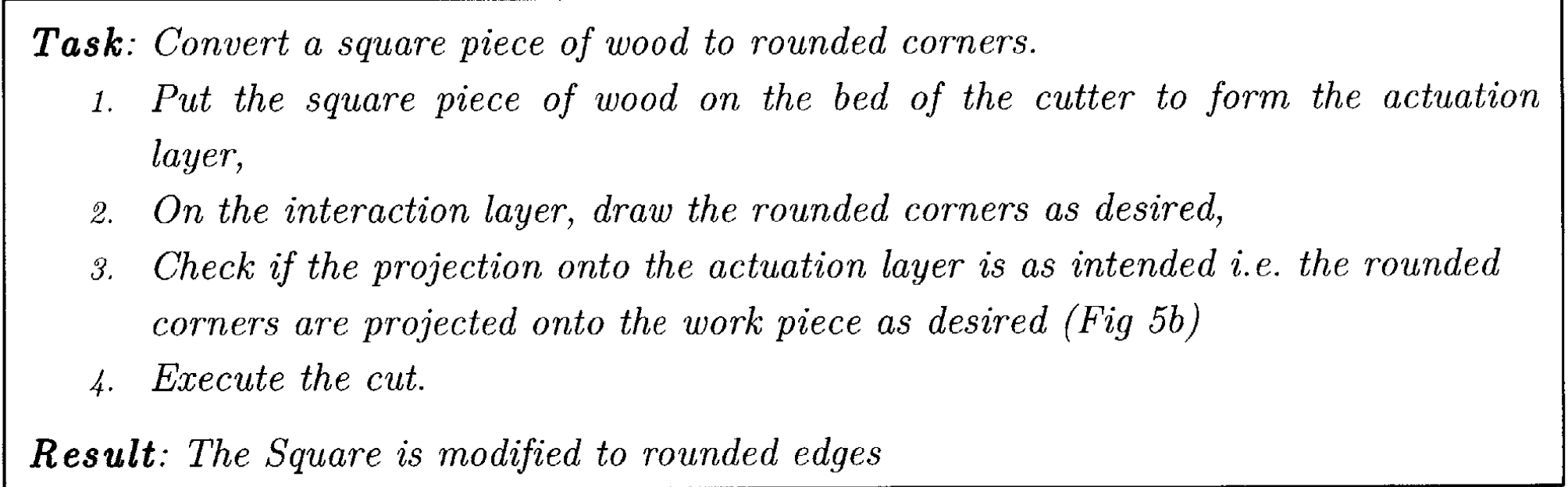

Workflow

Video