Ballshooter

Infrared multi-touch sensing for large interactive surfaces

Problem

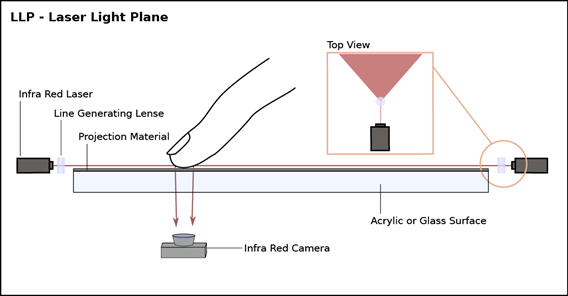

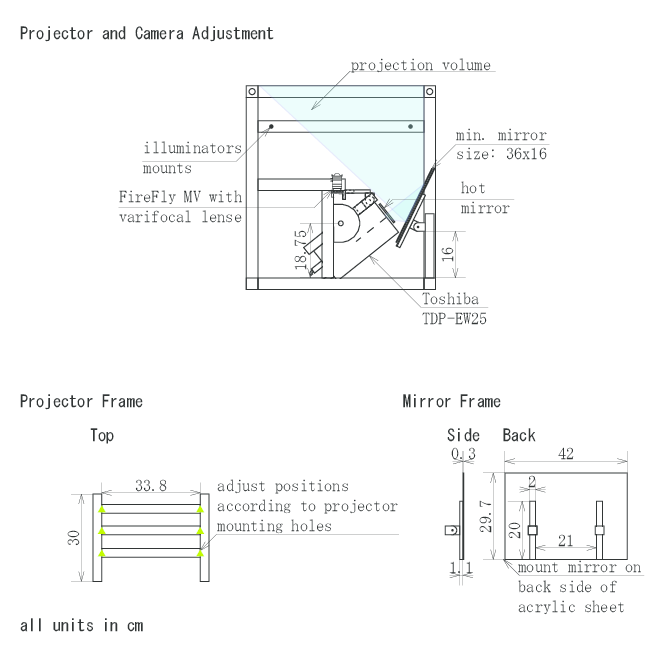

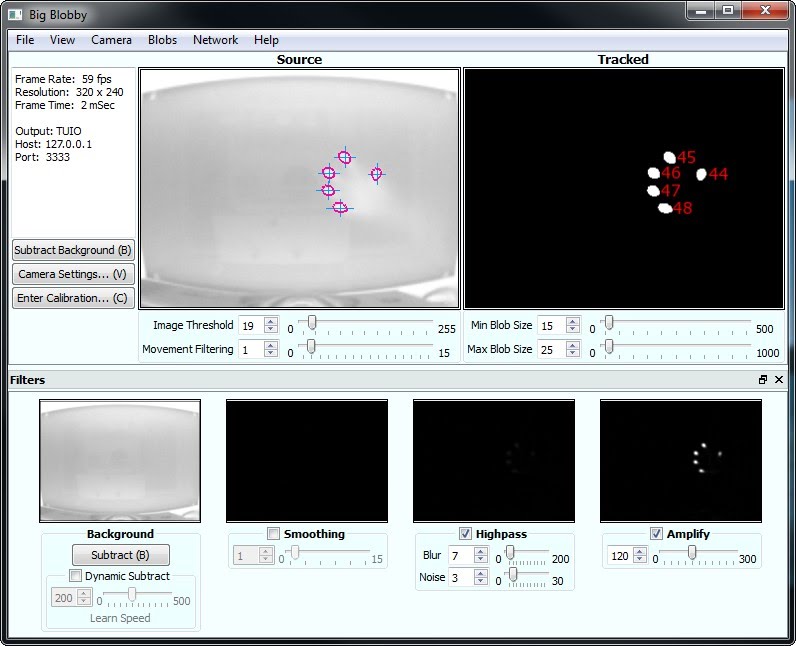

Touch sensing does not scale easily to large surfaces. Capacitive grids become prohibitively expensive beyond tablet size. Camera-based systems require controlled lighting. The Laser Light Plane (LLP) approach takes a different route: two infrared lasers mounted at opposing edges of a surface create a uniform light plane roughly 1 mm above the glass. When a finger or object breaks the plane, the scattered IR light is captured by a camera below the surface, mapped to surface coordinates, and broadcast as TUIO touch events.
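
The capture step can be illustrated with a minimal sketch. This is not the project's openFrameworks code: it assumes a grayscale IR frame given as a list of pixel rows, already aligned with the surface, and the function name, threshold, and minimum blob size are hypothetical choices for illustration. It thresholds the frame, groups bright pixels into blobs, and returns each blob's centroid in normalized coordinates.

```python
from collections import deque


def detect_touches(frame, threshold=200, min_area=2):
    """Return normalized (x, y) centroids of bright blobs in a grayscale frame.

    frame: list of rows, each a list of 0-255 intensities (assumed format).
    threshold, min_area: illustrative values, not taken from the project.
    """
    height, width = len(frame), len(frame[0])
    seen = [[False] * width for _ in range(height)]
    touches = []
    for y0 in range(height):
        for x0 in range(width):
            if seen[y0][x0] or frame[y0][x0] < threshold:
                continue
            # Flood-fill one 4-connected blob of above-threshold pixels.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            pixels = []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx]
                            and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(pixels) < min_area:
                continue  # too small to be a fingertip; treat as noise
            cx = sum(p[0] for p in pixels) / len(pixels)
            cy = sum(p[1] for p in pixels) / len(pixels)
            # Use pixel centers and divide by frame size, so the result
            # lies in [0, 1] regardless of the physical surface size.
            touches.append(((cx + 0.5) / width, (cy + 0.5) / height))
    return touches
```

For an 8x8 frame with a bright 2x2 patch covering columns 2-3 and rows 4-5, `detect_touches` returns `[(0.375, 0.625)]`; a single stray bright pixel is discarded by `min_area`. A real setup would also need lens correction and camera-to-surface calibration, which this sketch leaves out.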

The same setup scales from a 20-inch prototype to a 6-foot interactive table because the sensing mechanism is geometric, not material. The IR plane does not care about surface size; it only needs line-of-sight from the lasers. Detection runs in openFrameworks, with TUIO events consumed by Flash AS3 applications or custom renderers.
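
To show what a consumer receives, here is a hedged sketch of one TUIO frame encoded as an OSC bundle using only the Python standard library. It assumes the TUIO 1.1 `/tuio/2Dcur` cursor profile; the page does not say which profile the project used, and the helper names are hypothetical. Velocity and acceleration fields are set to zero for simplicity.

```python
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Encode an OSC message with str, int (int32), and float (float32) args."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        elif isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        else:
            tags += "f"
            payload += struct.pack(">f", arg)
    return osc_string(address) + osc_string(tags) + payload


def tuio_cursor_bundle(frame_id, cursors):
    """Build one TUIO 2Dcur frame.

    cursors: dict mapping session id -> (x, y), both normalized to [0, 1].
    """
    # "alive" lists every active session id for this frame.
    messages = [osc_message("/tuio/2Dcur", "alive", *cursors.keys())]
    for session_id, (x, y) in cursors.items():
        # set: session id, x, y, then x/y velocity and acceleration (zeroed).
        messages.append(osc_message(
            "/tuio/2Dcur", "set", session_id, float(x), float(y), 0.0, 0.0, 0.0))
    # "fseq" closes the frame with its sequence number.
    messages.append(osc_message("/tuio/2Dcur", "fseq", frame_id))
    # OSC bundle header; time tag 1 means "process immediately".
    bundle = osc_string("#bundle") + struct.pack(">Q", 1)
    for message in messages:
        bundle += struct.pack(">i", len(message)) + message
    return bundle
```

The resulting bytes would typically be sent as a single UDP datagram to the TUIO client, conventionally on port 3333. Because positions are normalized, the same message layout serves a 20-inch prototype and a 6-foot table alike.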

Variants

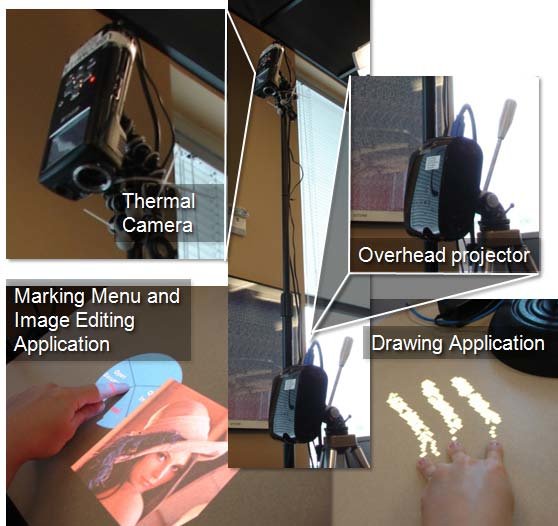

The transparent display variant uses prism-based rear projection onto glass, creating a see-through surface that responds to touch. Objects behind the glass remain visible while interactive graphics overlay them – an early form of spatial AR without a headset. This line of work informed later research at the Media Lab on augmented transparent surfaces (see Glassified).

Ballshooter

Ballshooter itself is a tangible game built on these sensing primitives: a physical version of Space Invaders. The display is a transparent LCD with its backlight removed, hand tracking comes from a Leap Motion sensor, and the game objects appear in the same visual plane as the real world behind the screen. The project was less about the game and more about proving that commodity IR hardware could turn any flat surface into a responsive multi-touch input device.

Video — Multi-touch Demo

Video — See-through Display

See-through displays and interaction. Prism-based projection screen developed at the MIT Media Lab with UROP student Meghana Bhat.

Developed during an internship at IIT Delhi for the Design Degree Show 2009.

Video — Ballshooter Game

Transparent LCD with its backlight removed, with Leap Motion hand tracking. Built in a team with Rahul Motiyar, Rajdeep, and Aman at IIT Bombay.